Today, well-known companies such as Tesla and Waymo train their autonomous vehicles (AVs) in hyper-realistic virtual worlds. These worlds are powered by data and serve as ideal driving schools for rehearsing extremely dangerous driving scenarios.

So far, these data-driven simulators have been proprietary and unavailable for public use.

Now, MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has released an open-source, data-driven simulation engine that removes the need for expensive and intricate model-based simulators.

Named VISTA 2.0 (Virtual Image Synthesis and Transformation for Autonomy, version 2.0), the engine is both a simulator and a training platform for learning and evaluating perception and control in driverless cars.

Being a data-driven platform means that VISTA builds its simulations from the real world: sensor data gathered by sensors mounted on the AV, both recorded in advance and captured on the spot, serves as the training data.

This data is combined to create a 3D virtual world within VISTA, in which a virtual vehicle decides its next steps in real time as the physical vehicle moves along its trajectory.

Compared with a model-based approach (which relies on 3D environments drawn by human artists), MIT’s data-driven simulator narrows the simulation-to-reality (sim-to-real) gap through its high photorealism, allowing driving control policies learned in simulation to transfer accurately to the real world.

Inside VISTA

VISTA 2.0 (hereafter VISTA) is the successor to VISTA 1.0 and adds two sensors on the AV: lidar and an event-based camera. Together with an RGB camera, these three sensors help the AV navigate at night, when lighting is poor.

The VISTA simulator has two key aspects. The first is a database of real-world data. This data is used for offline training of the neural network and includes footage of near-crashes and devastating crashes from CCTV cameras placed along roads, so the network can learn to avoid such edge-case scenarios.

The second is that VISTA uses three sensors (an RGB camera, lidar, and an event-based camera) to capture local viewpoints in real time and transform them into the 3D environment.

Together, these two data-driven properties give VISTA a comprehensive training setup, which translates into better control accuracy and makes near-crash scenarios easier to learn.

How does VISTA let an AV learn without the hand-built virtual environments of model-based simulators?

VISTA doesn’t rely on human artists to create intricate 3D virtual environments that are limited to specific use cases. Instead, it builds its own 3D virtual world from the real-world data provided through its database.

To increase the diversity of the environment, VISTA uses data from multiple sensors to synthesize novel viewpoints within it, supporting real-time training of the neural network.
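To make "synthesizing a novel viewpoint" concrete for lidar, here is a minimal sketch that re-expresses a recorded point cloud from the position of a virtual sensor that has been shifted sideways or turned slightly. It assumes a sensor frame with x forward, y left and z up, and the function name `reproject_points` is hypothetical; it is not VISTA's API. Real view synthesis must also handle occlusion and point density, which this sketch ignores.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up) in metres

def reproject_points(points: List[Point], dx: float, dy: float, dyaw: float) -> List[Point]:
    """Express recorded lidar points in the frame of a hypothetical virtual sensor
    displaced by (dx, dy) metres and rotated by dyaw radians about the z axis."""
    cos_y, sin_y = math.cos(dyaw), math.sin(dyaw)
    out: List[Point] = []
    for x, y, z in points:
        # Translate so the virtual sensor sits at the origin.
        tx, ty = x - dx, y - dy
        # Rotate by -dyaw so the x axis matches the virtual sensor's heading.
        out.append((cos_y * tx + sin_y * ty, -sin_y * tx + cos_y * ty, z))
    return out
```

For example, moving the virtual sensor 1 m to the left (`dy=1.0`) makes a point that was straight ahead appear 1 m to the right, which is exactly the kind of new viewpoint a policy needs to see during training.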

Alongside the RGB camera, the lidar and event-based sensors capture the local view in real time and send the data to the controller’s back-end engine.
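The three sensors report at different rates, so their readings have to be grouped before the back end can use them together. The sketch below shows one common way to do this, nearest-timestamp matching; it is an illustrative assumption rather than VISTA's actual pipeline, and `nearest_reading` and `bundle` are hypothetical names.

```python
import bisect
from typing import Any, Dict, List, Tuple

Reading = Tuple[float, Any]  # (timestamp in seconds, sensor payload)

def nearest_reading(stream: List[Reading], t: float) -> Reading:
    """Return the reading whose timestamp is closest to t (stream sorted by time)."""
    times = [ts for ts, _ in stream]
    i = bisect.bisect_left(times, t)
    if i == 0:
        return stream[0]
    if i == len(stream):
        return stream[-1]
    before, after = stream[i - 1], stream[i]
    # Prefer the earlier reading on a tie.
    return before if t - before[0] <= after[0] - t else after

def bundle(streams: Dict[str, List[Reading]], t: float) -> Dict[str, Reading]:
    """Group the closest reading from each sensor (e.g. rgb, lidar, events) into one snapshot."""
    return {name: nearest_reading(s, t) for name, s in streams.items()}
```

Matching to the nearest timestamp keeps the logic simple; a production system might instead interpolate or buffer readings until all sensors have reported.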

Inside VISTA, the data is turned into a 3D virtual environment in which a virtual car is placed and its possible movements are tested in real time.
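Testing a virtual car's possible movements requires some model of how the car moves in response to a command. A minimal sketch, assuming a kinematic bicycle model with an arbitrary 2.8 m wheelbase and 0.1 s time step, is shown below; the model choice, `Pose`, `step` and `rollout` are assumptions for illustration, not taken from VISTA.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Pose:
    x: float    # metres, forward
    y: float    # metres, left
    yaw: float  # radians, counter-clockwise

def step(pose: Pose, speed: float, steering: float,
         wheelbase: float = 2.8, dt: float = 0.1) -> Pose:
    """Advance the virtual car one time step with a kinematic bicycle model."""
    yaw_rate = speed / wheelbase * math.tan(steering)
    return Pose(
        x=pose.x + speed * math.cos(pose.yaw) * dt,
        y=pose.y + speed * math.sin(pose.yaw) * dt,
        yaw=pose.yaw + yaw_rate * dt,
    )

def rollout(pose: Pose, speed: float, steering: float, steps: int) -> List[Pose]:
    """Apply one candidate steering command for several steps and return the path."""
    path = [pose]
    for _ in range(steps):
        path.append(step(path[-1], speed, steering))
    return path
```

Comparing, say, `rollout(Pose(0.0, 0.0, 0.0), 10.0, 0.05, 20)` with a rollout at a different steering angle is one simple way to "test possible movements" before committing to one.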

At this point, the human-collected training data is used in the 3D environment to find the closest next observation. Once it is estimated, this information is sent back to the AV in the physical world to guide its next action on the road.
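The "closest next observation" step can be sketched as a nearest-neighbour lookup over the recorded log. This assumes each logged frame is stored with the pose (x, y, yaw) at which it was captured; `Sample` and `closest_observation` are hypothetical names, and the brute-force `min` search stands in for whatever indexing VISTA really uses. The function also returns the virtual car's offset relative to that frame, which a view-synthesis step could use to re-render the scene.

```python
import math
from typing import List, Tuple

# One recorded sample from the human-driven log: (x, y, yaw, frame_id).
Sample = Tuple[float, float, float, str]

def closest_observation(log: List[Sample], x: float, y: float,
                        yaw: float) -> Tuple[Sample, Tuple[float, float, float]]:
    """Find the logged frame nearest the virtual car and the car's offset from it."""
    best = min(log, key=lambda s: math.hypot(s[0] - x, s[1] - y))
    bx, by, byaw, _ = best
    c, s = math.cos(byaw), math.sin(byaw)
    dx, dy = x - bx, y - by
    # Offset expressed in the logged vehicle's frame; heading difference wrapped to (-pi, pi].
    rel = (c * dx + s * dy,
           -s * dx + c * dy,
           math.atan2(math.sin(yaw - byaw), math.cos(yaw - byaw)))
    return best, rel
```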

How do lidar and event-based cameras support the simulator?

Lidar, short for Light Detection and Ranging, uses light pulses to detect objects and estimate their distance. It emits eye-safe light pulses at rates of millions of pulses per second.
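Pulsed lidar typically estimates distance from a pulse's time of flight: the light travels to the object and back, so the range is half the round-trip distance, $d = c\,t/2$. A small sketch of that arithmetic (the function name `tof_range` is just illustrative):

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum

def tof_range(round_trip_s: float) -> float:
    """Distance to a target from a lidar pulse's round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0
```

For example, a pulse that returns after 200 nanoseconds (`tof_range(200e-9)`) corresponds to an object roughly 30 m away.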

Lidar is widely used in autonomous technology because it accurately measures the depth and geometry of objects.
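Each lidar return is essentially a range plus the direction in which the beam was fired, and converting those into x, y, z coordinates is what yields the geometric point cloud. A minimal sketch, assuming x forward, y left, z up, azimuth measured counter-clockwise from x and elevation measured up from the horizontal plane (conventions differ between sensors):

```python
import math
from typing import Tuple

def polar_to_xyz(range_m: float, azimuth_rad: float,
                 elevation_rad: float) -> Tuple[float, float, float]:
    """Convert one lidar return (range, azimuth, elevation) into sensor-frame x, y, z."""
    horizontal = range_m * math.cos(elevation_rad)  # projection onto the ground plane
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))
```

Applying this to every return in a full 360° sweep produces the kind of point cloud pictured below.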

The third sensor, an event-based camera, works differently from a conventional frame-based (shutter) camera: rather than capturing full images at fixed intervals, it detects changes in brightness in a dynamic scene. Its key feature is that each pixel responds to brightness changes independently of every other pixel.
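A common way to approximate event-camera output from ordinary video frames is to track each pixel's log brightness independently and emit an event (with polarity +1 or -1) every time it moves by a contrast threshold. The sketch below follows that idea; the threshold of 0.2, the `eps` offset and the name `generate_events` are assumptions, and a real sensor fires asynchronously with far finer timing than the frame timestamps used here.

```python
import math
from typing import List, Tuple

Event = Tuple[int, int, float, int]  # (x, y, timestamp, polarity +1/-1)

def generate_events(frames: List[List[List[float]]], times: List[float],
                    threshold: float = 0.2) -> List[Event]:
    """Emit an event whenever a pixel's log brightness has changed by at least
    `threshold` since that pixel's last event; every pixel is handled on its own."""
    eps = 1e-6  # avoids log(0) for fully dark pixels
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], times[1:]):
        for yy, row in enumerate(frame):
            for xx, v in enumerate(row):
                diff = math.log(v + eps) - ref[yy][xx]
                # A large change produces several events of the same polarity.
                while abs(diff) >= threshold:
                    pol = 1 if diff > 0 else -1
                    events.append((xx, yy, t, pol))
                    ref[yy][xx] += pol * threshold
                    diff -= pol * threshold
    return events
```

Because unchanged pixels produce no events at all, the output stays sparse, which is part of why event cameras cope well with fast motion and difficult lighting.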

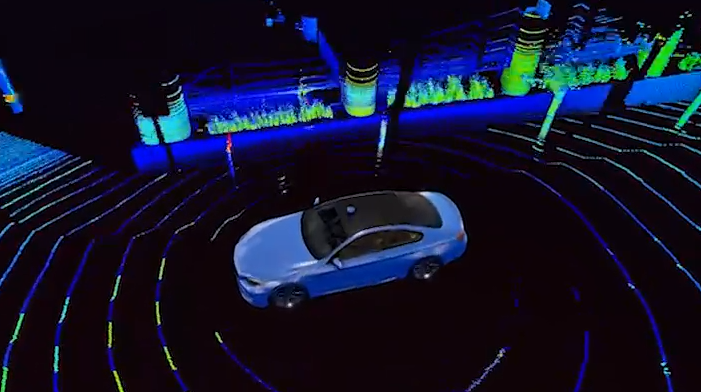

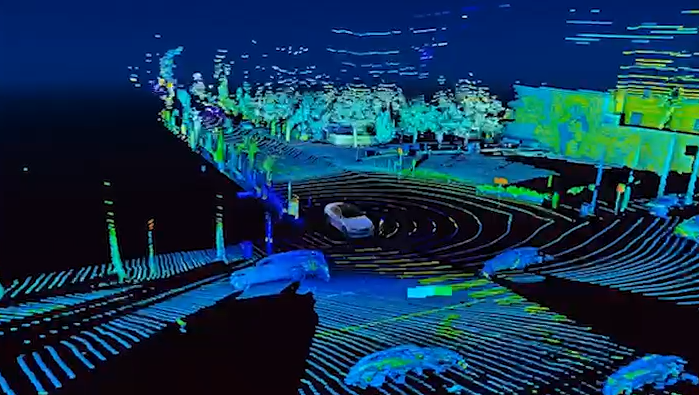

The images above show a vehicle using lidar on the road. Light beams are swept 360° around the vehicle to detect objects in real time. (Source: Velodyne Lidar)

VISTA is a valuable resource for the research community and could take self-driving car technology to the next level.

Make your own self-driving car!

Come and try the open-source code and build your first self-driving car. The training dataset and the self-driving Python code are available here!

Want to build your first AI model?

Join hundreds of others at Dice Analytics and register for a 2-month short course* on AI and deep learning.

*Flexible pricing and amazing discounts!