A machine learning model can be deceptive. Consider, for example, an object detection scenario in which a model is tasked with identifying cancerous cells. The model shows 95% accuracy, but on closer examination you may find that, despite this high accuracy, it bases its decisions on unimportant features. This is a case of a correct prediction made for the wrong reasons.

A related behavior also raises concerns about the reliability of a machine learning model: the model may fail to identify the cancerous cells despite picking the right features (a wrong prediction).

One plausible reason is that inaccurate labels assigned during the training phase push the model to treat irrelevant or incomplete features as the primary basis for its decision.

A machine learning model can only be trusted if there is assurance that it reaches an outcome the same way a human would.

But how can we know whether a model looks at things the same way humans do?

A research team from MIT, in collaboration with IBM researchers, designed a method that measures how well a model's behavior aligns with human decision making. The method, named Shared Interest, works by comparing the saliency of an ML output with human-annotated ground truth information.

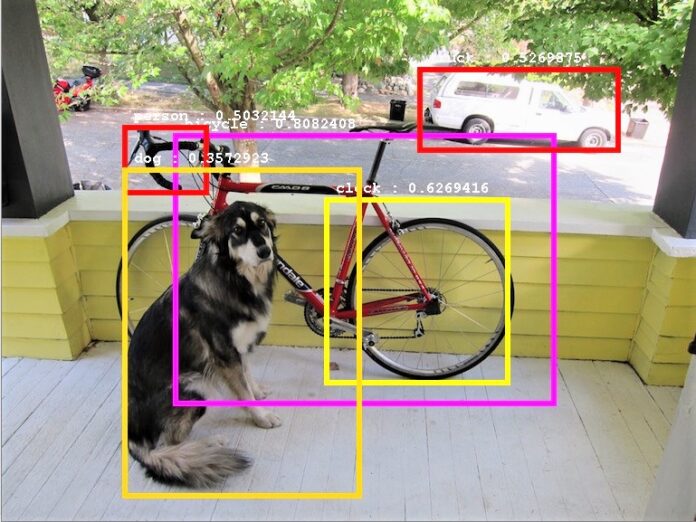

The saliency of an ML decision refers to the features that were most important to the model in reaching that decision. For image or text data, saliency can be highlighted visually, for example by marking the pixels or words that influenced the output the most.
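Saliency methods typically assign a continuous importance score to every pixel (or word). To compare saliency with a human annotation as a region, those scores can be turned into a binary mask. The Python sketch below does this by keeping the highest-scoring fraction of cells; the function name `top_fraction_mask`, the 30% cutoff, and the toy saliency values are hypothetical choices for illustration, not necessarily how Shared Interest thresholds saliency.

```python
from typing import List

Grid = List[List[float]]


def top_fraction_mask(saliency: Grid, fraction: float = 0.2) -> List[List[bool]]:
    """Mark roughly the top `fraction` of cells by saliency as salient.

    Hypothetical helper for illustration. Cells tied with the cutoff value
    are all kept, so slightly more than `fraction` of cells may be marked.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    values = sorted((v for row in saliency for v in row), reverse=True)
    # Number of cells to keep; at least one so the mask is never empty.
    k = max(1, round(fraction * len(values)))
    cutoff = values[k - 1]
    return [[v >= cutoff for v in row] for row in saliency]


# Toy 3x3 saliency map (made-up values): higher means more influence on the output.
saliency_map = [
    [0.05, 0.10, 0.02],
    [0.40, 0.90, 0.30],
    [0.01, 0.70, 0.08],
]
mask = top_fraction_mask(saliency_map, fraction=0.3)
for row in mask:
    # Render salient cells as '#' and the rest as '.'
    print("".join("#" if cell else "." for cell in row))
```

With a 30% cutoff, three of the nine cells (0.90, 0.70 and 0.40) are kept, which is the kind of highlighted region that is then compared against the human annotation.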

By comparing the saliency of an ML output with ground truth data (human annotations), Shared Interest computes how well the model's decision aligns with a human's.

Advantage: Before Shared Interest, model saliency could not be compared with human-annotated information at a large scale. With this method, users can rank and sort images according to eight recurring patterns of model behavior.

How does Shared Interest help evaluate the faithfulness of an AI?

Shared Interest quantifies alignment with three metrics:

- Intersection over Union (IoU), which measures the overall similarity between the saliency and the ground truth information.

- Ground Truth Coverage (GTC), which measures the proportion of the ground truth covered by the saliency, i.e., how fully the model relies on all of the ground truth information.

- Saliency Coverage (SC), which measures the proportion of the saliency that falls inside the ground truth, i.e., how strictly the model relies only on ground truth information.
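The three metrics can be read as set ratios over pixels, where S is the salient region and G is the human-annotated ground truth region: IoU = |S ∩ G| / |S ∪ G|, GTC = |S ∩ G| / |G|, and SC = |S ∩ G| / |S|. The following Python sketch computes them, assuming both regions are already binary masks stored as sets of (row, column) pixels. The helper names, the 0.0 result for empty regions, and the example boxes are assumptions for illustration. The last lines show one simple way to rank images, by IoU alone, which is a simplification of the pattern-based sorting Shared Interest supports.

```python
from typing import Set, Tuple

Pixel = Tuple[int, int]  # (row, column)


def iou(saliency: Set[Pixel], ground_truth: Set[Pixel]) -> float:
    """Intersection over Union: |S ∩ G| / |S ∪ G| (0.0 if both are empty, by assumption)."""
    union = saliency | ground_truth
    return len(saliency & ground_truth) / len(union) if union else 0.0


def ground_truth_coverage(saliency: Set[Pixel], ground_truth: Set[Pixel]) -> float:
    """GTC: |S ∩ G| / |G|, the share of the annotated region the model used."""
    return len(saliency & ground_truth) / len(ground_truth) if ground_truth else 0.0


def saliency_coverage(saliency: Set[Pixel], ground_truth: Set[Pixel]) -> float:
    """SC: |S ∩ G| / |S|, the share of the salient region inside the annotation."""
    return len(saliency & ground_truth) / len(saliency) if saliency else 0.0


def box(top: int, left: int, bottom: int, right: int) -> Set[Pixel]:
    """All pixels in an inclusive rectangular bounding box (hypothetical helper)."""
    return {(r, c) for r in range(top, bottom + 1) for c in range(left, right + 1)}


# Hypothetical example: the annotated object is a 4x4 box (16 pixels), and the
# salient region is a 2x4 strip (8 pixels) lying entirely inside it.
G = box(0, 0, 3, 3)
S = box(1, 0, 2, 3)
print(f"IoU={iou(S, G):.2f} GTC={ground_truth_coverage(S, G):.2f} "
      f"SC={saliency_coverage(S, G):.2f}")
# prints: IoU=0.50 GTC=0.50 SC=1.00

# Rank hypothetical images from least to most aligned by IoU.
examples = {"img_a": (S, G), "img_b": (G, G), "img_c": (box(5, 5, 6, 6), G)}
ranked = sorted(examples, key=lambda name: iou(*examples[name]))
print(ranked)
# prints: ['img_c', 'img_a', 'img_b']
```

Because S lies entirely inside G here, SC is 1.0 while GTC is only 0.5: the model attends to part of the annotated object and to nothing outside it.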

Experiment: The team trained a ResNet-50 neural network on ImageNet, a computer vision (CV) dataset of about 14 million images of everyday objects such as dogs and vehicles. In a separate experiment, a recurrent neural network (RNN) was trained on BeerAdvocate, a natural language processing (NLP) dataset of 1.5 million beer reviews.

Result: Over several iterative experiments, the team identified eight recurring patterns that capture important model behaviors.

We have summarized these eight patterns (for the CV experiment only) in the following graphic. Each pattern is characterized by the Shared Interest alignment metrics, the model's prediction, and a visual representation of the saliency and ground truth.

With this combination, Shared Interest surfaces important model behaviors across individual instances, which analysts can then use to improve model performance.

Note: Saliency (S) and ground truth information (G) are shown visually as a dark orange boundary and a yellow bounding box, respectively.
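Since each pattern combines the alignment metrics with whether the prediction was correct, one way to picture the grouping is a simple rule over those inputs. The Python sketch below is hypothetical: the 0.5 threshold, the order of the checks, and the bucket descriptions are illustrative assumptions, and the resulting 2 x 4 = 8 buckets are not the paper's definitions of its eight patterns.

```python
from typing import NamedTuple


class Scores(NamedTuple):
    iou: float  # Intersection over Union
    gtc: float  # Ground Truth Coverage
    sc: float   # Saliency Coverage


def bucket(scores: Scores, correct: bool, threshold: float = 0.5) -> str:
    """Assign an instance to one of 2 x 4 = 8 illustrative buckets.

    Hypothetical rule: the threshold, check order, and labels are assumptions,
    not the Shared Interest paper's pattern definitions.
    """
    if scores.iou >= threshold:
        region = "saliency closely matches annotation"
    elif scores.sc >= threshold:
        region = "saliency mostly inside annotation"
    elif scores.gtc >= threshold:
        region = "saliency covers annotation plus context"
    else:
        region = "saliency mostly outside annotation"
    outcome = "correct" if correct else "incorrect"
    return f"{outcome}: {region}"


# Correct prediction driven by a small part of the annotated object.
print(bucket(Scores(iou=0.2, gtc=0.2, sc=1.0), correct=True))
# prints: correct: saliency mostly inside annotation

# Wrong prediction whose saliency spreads well beyond the annotated object.
print(bucket(Scores(iou=0.1, gtc=0.9, sc=0.1), correct=False))
# prints: incorrect: saliency covers annotation plus context
```

Checking IoU first means an instance with both high SC and high GTC lands in the "closely matches" bucket whenever the overlap is large enough; other orderings or thresholds would split the cases differently.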

For an in-depth analysis of the Shared Interest results, see the source publication listed at the end of this article.

Challenge: Shared Interest is the first method of its kind, but it has its own limitations. These include the need for humans to produce manual annotations.

However, given the kind of results it generates to support decision making in critical applications, it is a significant step toward trustworthy AI in real-world scenarios.


Sources

MIT and IBM Research: Shared Interest official research material on aligning model behavior with human reasoning.

Freethink: "Can we trust an AI? Shared Interest tells how", an article on the MIT and IBM Shared Interest research.