Tuning, or optimization as it is commonly called, can be an enormously daunting task when it comes to deep neural networks (artificial neural networks with a large number of hidden layers). Recent advances in artificial intelligence research focus on making the training of such very wide ANNs more efficient.

When training an AI model, hyperparameter tuning (hypertune) is an optimization technique in which you choose a metric to optimize and then search for the hyperparameter values that give the best result on it, thus improving the predictive accuracy of your neural network.

Recently, Microsoft Research, in collaboration with OpenAI, came up with a new hypertune technique called μ-Transfer, which speeds up hyperparameter optimization for neural network training.

The technique is described in a paper titled ‘Tensor Programs V: Tuning Large Neural Networks via Zero-Shot Hyperparameter Transfer’, released along with PyTorch code for those who want to use it right away.

This paper extends Microsoft Research’s ‘Tensor Programs’ series. In 2020, the series introduced a technique called μ-Parameterization (µP) for ANNs in the ‘infinite-width limit’.

So far, the technique has been tested on a version of GPT-3, a massive ANN with 6.7 billion parameters. The results are impressive: the tuning cost was only 7% of the total pretraining cost.

A Look into Tuning of ANNs

If this is not your first time learning about ANNs, you probably know that a neural network involves two kinds of values: parameters and hyperparameters. Parameters are initialized and then learned during training, while hyperparameters must be set before training starts.

The parameters, also known as ‘weights’, sit on the connections between nodes. Each one carries a value representing how strongly it affects the “final prediction”.

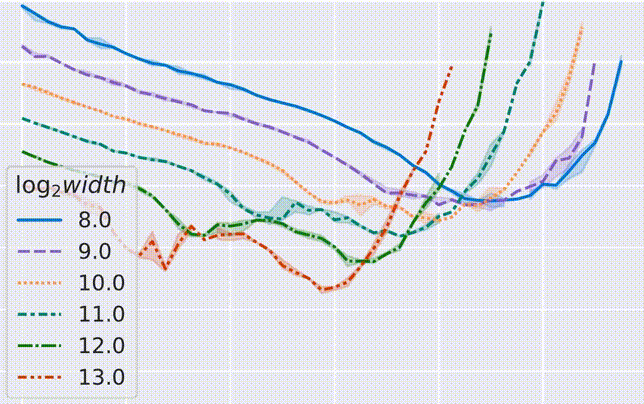

Hyperparameters include the depth and width of your neural network: depth is the total number of hidden layers between the input and output layers, and width is the number of nodes within a single layer. They also include training settings such as the learning rate.
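To make the link between depth, width, and parameter count concrete, here is a minimal sketch for a plain fully connected network. The function name `count_parameters` and the layer sizes are assumptions chosen for illustration; they do not come from the paper.

```python
def count_parameters(n_inputs, n_outputs, depth, width):
    """Count weights and biases in a fully connected network.

    depth = number of hidden layers, width = nodes per hidden layer.
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    layer_sizes = [n_inputs] + [width] * depth + [n_outputs]
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * fan_out  # one weight per connection
        total += fan_out           # one bias per node
    return total


small = count_parameters(n_inputs=10, n_outputs=1, depth=2, width=64)
large = count_parameters(n_inputs=10, n_outputs=1, depth=2, width=128)
print(small, large)  # 4929 18049
```

Doubling the width here roughly quadruples the number of weights between hidden layers, which is why wide networks become expensive so quickly.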

The Computationally Expensive Hypertuning

ANNs are self-learning algorithms: they learn their parameters from data without the manual model building that conventional machine learning algorithms used to require. Their hyperparameters, however, are typically selected by trial and error.

With trial and error, a user sets a range of values for each hyperparameter. Based on this range, a first trial is run, and subsequent trials use guessed hyperparameter values (informed by the results of previous trials). In the end, the hyperparameter value that shows the best result is picked. That’s how an ANN’s hyperparameters get tuned!
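The loop below is a rough sketch of such a search over a single hyperparameter, the learning rate. It uses plain random search, which ignores the results of earlier trials, as a simpler stand-in for smarter guessing. The `validation_loss` function is a hypothetical toy curve; a real trial would train the network and evaluate it on held-out data.

```python
import math
import random


def validation_loss(learning_rate):
    """Hypothetical stand-in for one training run.

    The toy curve is lowest at learning_rate = 1e-2.
    """
    return (math.log10(learning_rate) + 2.0) ** 2


def random_search(low, high, n_trials, seed=0):
    """Try n_trials learning rates sampled log-uniformly in [low, high]."""
    rng = random.Random(seed)
    best_lr, best_loss = None, float("inf")
    for _ in range(n_trials):
        exponent = rng.uniform(math.log10(low), math.log10(high))
        lr = 10.0 ** exponent
        loss = validation_loss(lr)  # one full "trial"
        if loss < best_loss:
            best_lr, best_loss = lr, loss
    return best_lr, best_loss


best_lr, best_loss = random_search(low=1e-5, high=1.0, n_trials=50)
print(f"best learning rate: {best_lr:.4g}, loss: {best_loss:.4g}")
```

Every pass through the loop stands for a complete training run, so the total cost is the number of trials multiplied by the cost of training the model once.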

Unfortunately, the trial-and-error technique is very slow, especially when the ANN is wide (carrying millions or billions of parameters). Industry experts are continuously working on advanced techniques to enable fast and accurate feature learning as width grows toward the ‘infinite-width limit’.

μ-Transfer reduces this trial-and-error cost: hyperparameters are tuned on a small model and then transferred to the large one, which avoids repeated trials at full size. This is especially important for huge networks like GPT-3.
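The tune-small-then-transfer workflow can be sketched as follows. This is a toy illustration and not an implementation of µP: the hypothetical `toy_loss` is built so that the best learning rate is the same at every width, which is the behavior µP aims to provide. The widths, the candidate values, and the assumption that one trial costs about `width ** 2` units of compute are all illustrative.

```python
def toy_loss(learning_rate, width):
    """Hypothetical stand-in for a full training run.

    Assumption: the best learning rate (0.01) does not depend on width,
    and wider models reach a lower loss.
    """
    return (learning_rate - 0.01) ** 2 * 1e4 + 1.0 / width


def tune(width, candidates):
    """Trial and error on one model size; returns the best candidate."""
    return min(candidates, key=lambda lr: toy_loss(lr, width))


candidates = [0.001, 0.003, 0.01, 0.03, 0.1]
small_width, large_width = 256, 8192

# Step 1: run every trial on the cheap, narrow model.
best_lr = tune(small_width, candidates)

# Step 2: train the wide model once with the transferred value.
large_loss = toy_loss(best_lr, large_width)

# Rough cost comparison, assuming one trial costs about width ** 2 units.
cost_direct = len(candidates) * large_width ** 2
cost_transfer = len(candidates) * small_width ** 2 + large_width ** 2
print(best_lr, cost_transfer / cost_direct)
```

Under these assumptions, nearly all of the remaining cost is the single training run of the wide model; the trials on the narrow model add very little.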

Colin Raffel, co-creator of T5 and assistant professor of computer science at the University of North Carolina, shared his thoughts on the breakthrough:

“µP provides an impressive step toward removing some of the black magic from scaling up neural networks. It also provides a theoretically backed explanation of some tricks used by past works, like the T5 model. I believe both practitioners and researchers alike will find this work valuable.”